Human Centric Information Processing Lab.

Graduate School of Interdisciplinary Science and Engineering in Health Systems, Okayama University

Research Theme

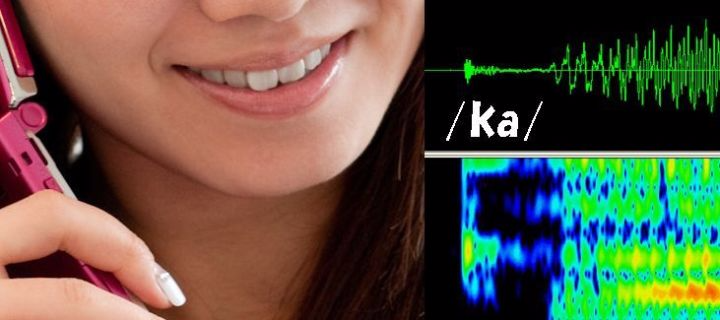

Speech

During the last decade, thanks to developments of probabilistic algorithms, large speech databases and high performance computer hardware, speech processing technologies such as speech recognition and speech synthesis have been dramatically improved. We can obtain considerable benefits from the technologies including car navigation and speech retrieval through smartphones. However, the performance is still far from those of human beings and users are not completely satisfied with them. To challenge the problem, we try to focus on higher level behaviors of human beings like emotion and speech acts.

Life log

Recently, the activities of our daily lives could be recorded electronically from various information sources. This is referred to as a life log, which is a massive electronic database of every activity, including cyberspace activities (e.g., web sites visited, keywords for searching, and e-mail content) and real-world activities (e.g., photos, videos, physical locations recorded via wearable GPS, and body movements captured by acceleration sensors). Our research goal is to develop user behavior models using the life log and adaptively optimize functionalities of services and systems for individual users.

Human interface

In big data eras, data mining algorithms play important roles to extract useful information from diverse and huge data. However, algorithms are not the only technology to utilize big data in depth. We believe one of the important technology is human interface that enables users to intuitively understand meaning of analyzed results in trial and error style. Currently, we are trying to visualize synchronously recorded data in life logs to support human memory and to visualize environmental sounds to understand urban life styles.

Recent publications

Publications

2023

Predictions for sound events and soundscape impressions from environmental sound using deep neural networks

- Proceedings of the 52nd International Congress and Exposition on Noise Control Engineering (Inter-Noise 2023), Paper ID 3-16-4, pp.5239–5250, Chiba, Japan, Aug. 2023. (Oral)

- doi: 10.3397/IN20230739

Abstract

In this study, we investigate methods for quantifying soundscape impressions, meaning pleasantness and eventfulness, from environmental sounds. From a point of view of Machine Learning (ML) research areas, acoustic scene classification (ASC) tasks and sound event classification (SEC) tasks are intensively studied and their results are helpful to consider soundscape impressions. In general, while most of ASCs and SECs use only sound for the classifications, a soundscape impression should not be perceived just from a sound but perceived from a sound with a landscape by human beings. Therefore, to establish automatic quantification of soundscape impressions, it should use other information such as landscape in addition to sound. First, we tackle to predict of two soundscape impressions using sound data collected by the cloud sensing method. For this purpose, we have proposed prediction method of soundscape impressions using environmental sounds and aerial photographs. Second, we also tackle to establish environmental sound classification using feature extractor trained by Variational Autoencoder (VAE). The feature extractor by VAE can be trained as unsupervised learning, therefore, it could be promising approaches for the growth dataset like as our cloud-sensing data collection schemes. Finally, we discuss about an integration for these methods.

Download Links: Ingenta Connect

Sound map of urban areas recorded by smart devices: case study at Okayama and Kurashiki

- Proceedings of the 52nd International Congress and Exposition on Noise Control Engineering (Inter-Noise 2023), Paper ID 1-11-18, pp.5227–5238, Chiba, Japan, Aug. 2023. (Oral)

- doi: 10.3397/IN20230738

Abstract

This paper presents a sound map to visualize environmental sounds collected using a cloud sensing approach, i.e. participatory sensing and opportunistic sensing. Our sound map have two major extra functions than a simple noise map which visualized sound levels. The first one is introduction of visualization of sound-type icons on the noise map to enrich information. The second one is animating a transition of sound levels on noise map to visible the change according to the time in a day as a simulation. To demonstrate our sound map and develop the functions, we carried out several experiments. First, we developed a sound collection system to simultaneously collect physical sounds, their statistics, and their subjective impression. Then, we conducted sound collection experiments using the developed system in Okayama city and Kurashiki city. We analyze the data to focus on the difference between the cities and the differences between the usual and the unusual days. Furthermore, the collected data included subjective impressions by participants. We try to predict bustle situations in sightseeing areas by two approaches; the first is using Gaussian Mixture Models, and the second is using i-vector, which is well-known approach in the speaker identification tasks.

Download Links: Ingenta Connect

2022

Prediction method of Soundscape Impressions using Environmental Sounds and Aerial Photographs

- Proceedings of APSIPA Annual Summit and Conference (APSIPA-ASC 2022), pp.1222–1227, Chiang Mai, Thailand, Nov. 2022. (Oral/Video presentation)

- doi: 10.1109/ICCE53296.2022.9730332

Abstract

We investigate an method for quantifying city characteristics based on impressions of a sound environment. The quantification of the city characteristics will be beneficial to government policy planning, tourism projects, etc. In this study, we try to predict two soundscape impressions, meaning pleasantness and eventfulness, using sound data collected by the cloud-sensing method. The collected sounds comprise meta information of recording location using Global Positioning System. Furthermore, the soundscape impressions and sound-source features are separately assigned to the cloud-sensing sounds by assessments defined using Swedish Soundscape-Quality Protocol, assessing the quality of the acoustic environment. The prediction models are built using deep neural networks with multi-layer perceptron for the input of 10-second sound and the aerial photographs of its location. An acoustic feature comprises equivalent noise level and outputs of octave-band filters every second, and statistics of them in 10 s. An image feature is extracted from an aerial photograph using ResNet-50 and autoencoder architecture. We perform comparison experiments to demonstrate the benefit of each feature. As a result of the comparison, aerial photographs and sound-source features are efficient to predict impression information. Additionally, even if the sound-source features are predicted using acoustic and image features, the features also show fine results to predict the soundscape impression close to the result of oracle sound-source features.

Download Links: IEEE Xplore | University's Repository (TBA) | Preprint (arXiv:2209.04077)

Incremental Audio Scene Classifier Using Rehearsal-Based Strategy

- Proceedings of IEEE 11th Global Conference on Consumer Electronics (GCCE 2022), pp.619–623, Osaka, Japan, Oct. 2022. (Oral/ONLINE PRESENTATION)

- doi: 10.1109/GCCE56475.2022.10014339

Abstract

Novel class incremental is a special case of concept drift, where a new class exists in future learning. To solve this problem, updating or retraining the model is needed to accommodate new knowledge into the model. However, when the model is retrained using the latest dataset, the performance gradually decreases because the model forgets the previous knowledge. Therefore, a straightforward solution is rehearsal-based learning with all of the past datasets, but some systems have a limitation on the amounts of data saved. This paper proposes a method for selecting the small portion of the past dataset as representative data, and then we use GAN and data augmentation to generate the samples as an extension of the rehearsal data. Experimental results show promising results, prevent catastrophic forgetting, and increase backward transfer. The model performs better when using a low logit sample than a high logit in selecting the representative data and GAN.

Download Links: TBD IEEE Xplore | University's Repository (TBA)

Speech-like Emotional Sound Generation using WaveNet

- IEICE Transactions on Information and Systems, pp.1581–1589, vol.E105-D, no.9, Sept. 2022.

- doi: 10.1587/transinf.2021EDP7236

Abstract

In this paper, we propose a new algorithm to generate Speech-like Emotional Sound (SES). Emotional expressions may be the most important factor in human communication, and speech is one of the most useful means of expressing emotions. Although speech generally conveys both emotional and linguistic information, we have undertaken the challenge of generating sounds that convey emotional information alone. We call the generated sounds “speech-like,” because the sounds do not contain any linguistic information. SES can provide another way to generate emotional response in human-computer interaction systems. To generate “speech-like” sound, we propose employing WaveNet as a sound generator conditioned only by emotional IDs. This concept is quite different from the WaveNet Vocoder, which synthesizes speech using spectrum information as an auxiliary feature. The biggest advantage of our approach is that it reduces the amount of emotional speech data necessary for training by focusing on non-linguistic information. The proposed algorithm consists of two steps. In the first step, to generate a variety of spectrum patterns that resemble human speech as closely as possible, WaveNet is trained with auxiliary mel-spectrum parameters and Emotion ID using a large amount of neutral speech. In the second step, to generate emotional expressions, WaveNet is retrained with auxiliary Emotion ID only using a small amount of emotional speech. Experimental results reveal the following: (1) the two-step training is necessary to generate the SES with high quality, and (2) it is important that the training use a large neutral speech database and spectrum information in the first step to improve the emotional expression and naturalness of SES.

Download Links: J-STAGE | University's Repository (TBA)

Concept drift adaptation for audio scene classification using high-level features

- Proceedings of 2022 IEEE International Conference on Consumer Electronics (ICCE), (MDA-V, no.2), 5 pages, Jan. 2022. (Oral/ONLINE PRESENTATION)

- doi: 10.1109/ICCE53296.2022.9730332

Abstract

Data used in the model training is assumed to have a similar distribution when the model is applied. However, in some applications, the data distributions may change over time. This condition, known as the concept drift, might decrease the model performance because the model is trained and evaluated in different distributions. To solve this problem in the audio scene classification task, we previously proposed the Combine-merge Gaussian mixture model (CMGMM) algorithm, where Mel-frequency cepstral coefficients (MFCCs) are used as the feature vector. In this paper, in the CMGMM algorithm, we propose to use the Pre-trained audio neural networks (PANNs) to model event audio that exists in the scene. A motivation is, instead of acoustic features, to make the best use of high-level features obtained by a model that trained using a large amount of audio data. The experiment result shows that the proposed method using PANNs improves model accuracy. In the active methods with abrupt and gradual concept drift, it is recommended to use PANNs to have significant accuracy improvement and obtain optimal adaptation results.

Download Links: IEEE Xplore | University's Repository (TBA)

2021

Acoustic Scene Classifier Based on Gaussian Mixture Model in the Concept Drift Situation

- Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 167–176, Sept. 2021.

- doi: 10.25046/aj060519

Abstract

The data distribution used in model training is assumed to be similar with that when the model is applied. However, in some applications, data distributions may change over time. This situation is called the concept drift, which might decrease the model performance because the model is trained and evaluated in different distributions. To solve this problem for scene audio classification, this study proposes the kernel density drift detection (KD3) algorithm to detect the concept drift and the combine–merge Gaussian mixture model (CMGMM) algorithm to adapt to the concept drift. The strength of the CMGMM algorithm is its ability to perform adaptation and continuously learn from stream data with a local replacement strategy that enables it to preserve previously learned knowledge and avoid catastrophic forgetting. KD3 plays an essential role in detecting the concept drift and supplying adaptation data to the CMGMM. Their performance is evaluated for four types of concept drift with three systematically generated scenarios. The CMGMM is evaluated with and without the concept drift detector. In summary, the combination of the CMGMM and KD3 outperforms two of four other combination methods and shows its best performance at a recurring concept drift.

Download Links: ASTES Journal | University's Repository (TBA)

Phonetic and Prosodic Information Estimation Using Neural Machine Translation for Genuine Japanese End-to-End Text-to-Speech

- Proceedings of Interspeech 2022, pp.126–130, Online/Virtual Conference (Brno, Czech Republic), Sept. 2021. (Oral/ONLINE PRESENTATION)

- doi: 10.21437/Interspeech.2021-914

Abstract

The biggest obstacle to develop end-to-end Japanese text-to-speech (TTS) systems is to estimate phonetic and prosodic information (PPI) from Japanese texts. The following are the reasons: (1) the Kanji characters of the Japanese writing system have multiple corresponding pronunciations, (2) there is no separation mark between words, and (3) an accent nucleus must be assigned at appropriate positions. In this paper, we propose to solve the problems by neural machine translation (NMT) on the basis of encoder-decoder models, and compare NMT models of recurrent neural networks and the Transformer architecture. The proposed model handles texts on token (character) basis, although conventional systems handle them on word basis. To ensure the potential of the proposed approach, NMT models are trained using pairs of sentences and their PPIs that are generated by a conventional Japanese TTS system from 5 million sentences. Evaluation experiments were performed using PPIs that are manually annotated for 5,142 sentences. The experimental results showed that the Transformer architecture has the best performance, with 98.0% accuracy for phonetic information estimation and 95.0% accuracy for PPI estimation. Judging from the results, NMT models are promising toward end-to-end Japanese TTS.

Download Links: ISCA Archive | University's Repository (TBA)

Model architectures to extrapolate emotional expressions in DNN-based text-to-speech

- Speech Communication, vol.126, pp.35–43, Feb. 2021. (Available online 24 November 2020)

Abstract

This paper proposes architectures that facilitate the extrapolation of emotional expressions in deep neural network (DNN)-based text-to-speech (TTS). In this study, the meaning of “extrapolate emotional expressions” is to borrow emotional expressions from others, and the collection of emotional speech uttered by target speakers is unnecessary. Although a DNN has potential power to construct DNN-based TTS with emotional expressions and some DNN-based TTS systems have demonstrated satisfactory performances in the expression of the diversity of human speech, it is necessary and troublesome to collect emotional speech uttered by target speakers. To solve this issue, we propose architectures to separately train the speaker feature and the emotional feature and to synthesize speech with any combined quality of speakers and emotions. The architectures are parallel model (PM), serial model (SM), auxiliary input model (AIM), and hybrid models (PM&AIM and SM&AIM). These models are trained through emotional speech uttered by few speakers and neutral speech uttered by many speakers. Objective evaluations demonstrate that the performances in the open-emotion test provide insufficient information. They make a comparison with those in the closed-emotion test, but each speaker has their own manner of expressing emotion. However, subjective evaluation results indicate that the proposed models could convey emotional information to some extent. Notably, the PM can correctly convey sad and joyful emotions at a rate of >60%.

Download Links: Science Direct | University's Repository (TBA)

2020

Module Comparison of Transformer-TTS for Speaker Adaptation based on Fine-tuning

- Proceedings of APSIPA Annual Summit and Conference 2020, pp.826–830, Online/Virtual Conference (Auckland, New Zealand), Dec. 2020. (Oral/ONLINE PRESENTATION)

Abstract

End-to-end text-to-speech (TTS) models have achieved remarkable results in recent times. However, the model requires a large amount of text and audio data for training. A speaker adaptation method based on fine-tuning has been proposed for constructing a TTS model using small scale data. Although these methods can replicate the target speaker's voice quality, synthesized speech includes the deletion and/or repetition of speech. The goal of speaker adaptation is to change the voice quality to match the target speaker's on the premise that adjusting the necessary modules will reduce the amount of data to be fine-tuned. In this paper, we clarify the role of each module in the Transformer-TTS process by not updating it. Specifically, we froze character embedding, encoder, layer predicting stop token, and loss function for estimating sentence ending. The experimental results showed the following: (1) fine-tuning the character embedding did not result in an improvement in the deletion and/or repetition of speech, (2) speech deletion increases if the encoder is not fine-tuned, (3) speech deletion was suppressed when the layer predicting stop token is not fine-tuned, and (4) there are frequent speech repetitions at sentence end when the loss function estimating sentence ending is omitted.

Download Links: IEEE Xplore | PDF(APSIPA) | University's Repository (TBA)

Concept Drift Adaptation for Acoustic Scene Classifier Based on Gaussian Mixture Model

- The 2020 IEEE Region 10 Conference (IEEE-TENCON 2020), pp.450–455, Online/Virtual Conference (Osaka, Japan), Nov. 2020.

Abstract

In non-stationary environments, data might change over time, leading to variations in the underlying data distributions. This phenomenon is called concept drift and it negatively impacts the performance of scene detection models due to them being trained and evaluated on data with different distributions. This paper presents a new algorithm for detecting and adapting to concept drifts based on combining the existing and new components Gaussian mixture model then merging it. The algorithm is equipped with a drift detector based on kernel density estimation enabling the algorithm to adapt to new data and generalize over old and new concepts well.

Download Links: IEEE Xplore | University's Repository (TBA)

Controlling the Strength of Emotions in Speech-Like Emotional Sound Generated by WaveNet

- Proceedings of Interspeech 2020, pp.3421–3425, Online/Virtual Conference (Shanghai, China), Oct. 2020. (Oral/ONLINE PRESENTATION)

Abstract

This paper proposes a method to enhance the controllability of a Speech-like Emotional Sound (SES). In our previous study, we proposed an algorithm to generate SES by employing WaveNet as a sound generator and confirmed that SES can successfully convey emotional information. The proposed algorithm generates SES using only emotional IDs, which results in having no linguistic information. We call the generated sounds “speech-like” because they sound as if they are uttered by human beings although they contain no linguistic information. We could synthesize natural sounding acoustic signals that are fairly different from vocoder sounds to make the best use of WaveNet. To flexibly control the strength of emotions, this paper proposes to use a state of voiced, unvoiced, and silence (VUS) as auxiliary features. Three types of emotional speech, namely, neutral, angry, and happy, were generated and subjectively evaluated. Experimental results reveal the following: (1) VUS can control the strength of SES by changing the durations of VUS states, (2) VUS with narrow F0 distribution can express stronger emotions than that with wide F0 distribution, and (3) the smaller the unvoiced percentage is, the stronger the emotional impression is.

Download Links: ISCA Archive | University's Repository (TBA)

Semi-Supervised Speaker Adaptation for End-to-End Speech Synthesis with Pretrained Models

- ICASSP 2020, pp. 7634–7638, Barcelona, Spain, May 2020. (Oral/ONLINE PRESENTATION)

Abstract

Recently, end-to-end text-to-speech (TTS) models have achieved a remarkable performance, however, requiring a large amount of paired text and speech data for training. On the other hand, we can easily collect unpaired dozen minutes of speech recordings for a target speaker without corresponding text data. To make use of such accessible data, the proposed method leverages the recent great success of state-of-the-art end-to-end automatic speech recognition (ASR) systems and obtains corresponding transcriptions from pretrained ASR models. Although these models could only provide text output instead of intermediate linguistic features like phonemes, end-to-end TTS can be well trained with such raw text data directly. Thus, the proposed method can greatly simplify a speaker adaptation pipeline by consistently employing end-to-end ASR/TTS ecosystems. The experimental results show that our proposed method achieved comparable performance to a paired data adaptation method in terms of subjective speaker similarity and objective cepstral distance measures.

Download Links: IEEE Xplore | University's Repository (TBA)

Espnet-TTS: Unified, Reproducible, and Integratable Open Source End-to-End Text-to-Speech Toolkit

- Tomoki Hayashi, Ryuichi Yamamoto, Katsuki Inoue, Takenori Yoshimura, Shinji Watanabe, Tomoki Toda, Kazuya Takeda, Yu Zhang, Xu Tan

- ICASSP 2020, pp. 7654–7658, Barcelona, Spain, May 2020. (Oral/ONLINE PRESENTATION)

Abstract

Recently, end-to-end text-to-speech (TTS) models have achieved a remarkable performance, however, requiring a large amount of paired text and speech data for training. On the other hand, we can easily collect unpaired dozen minutes of speech recordings for a target speaker without corresponding text data. To make use of such accessible data, the proposed method leverages the recent great success of state-of-the-art end-to-end automatic speech recognition (ASR) systems and obtains corresponding transcriptions from pretrained ASR models. Although these models could only provide text output instead of intermediate linguistic features like phonemes, end-to-end TTS can be well trained with such raw text data directly. Thus, the proposed method can greatly simplify a speaker adaptation pipeline by consistently employing end-to-end ASR/TTS ecosystems. The experimental results show that our proposed method achieved comparable performance to a paired data adaptation method in terms of subjective speaker similarity and objective cepstral distance measures.

Download Links: IEEE Xplore | Preprint(arXiv:1910.10909)

2019

Speech-like Emotional Sound Generator by WaveNet

- APSIPA ASC 2019, pp. 143–147, Lanzhou, China, Nov. 2019. (Oral)

Abstract

In this paper, we propose a new algorithm to generate Speech-like Emotional Sound (SES). Emotional information plays an important role in human communication, and speech is one of the most useful media to express emotions. Although, in general, speech conveys emotional information as well as linguistic information, we have undertaken the challenge to generate sounds that convey emotional information without linguistic information, which results in making conversations in human-machine interactions more natural in some situations by providing non-verbal emotional vocalizations. We call the generated sounds “speech-like”, because the sounds do not contain any linguistic information. For the purpose, we propose to employ WaveNet as a sound generator conditioned by only emotional IDs. The idea is quite different from WaveNet Vocoder that synthesizes speech using spectrum information as auxiliary features. The biggest advantage of the idea is to reduce the amount of emotional speech data for the training. The proposed algorithm consists of two steps. In the first step, WaveNet is trained to obtain phonetic features using a large speech database, and in the second step, WaveNet is re-trained using a small amount of emotional speech. Subjective listening evaluations showed that the SES could convey emotional information and was judged to sound like a human voice.

Download Links: APSIPA Archive | University's Repository

DNN-based Voice Conversion with Auxiliary Phonemic Information to Improve Intelligibility of Glossectomy Patients' Speech

- APSIPA ASC 2019, pp. 138–142, Lanzhou, China, Nov. 2019. (Oral)

Abstract

In this paper, we propose using phonemic information in addition to acoustic features to improve the intelligibility of speech uttered by patients with articulation disorders caused by a wide glossectomy. Our previous studies showed that voice conversion algorithm improves the quality of glossectomy patients' speech. However, losses in acoustic features of glossectomy patients’ speech are so large that the quality of the reconstructed speech is low. To solve this problem, we explored potentials of several additional information to improve speech intelligibility. One of the candidates is phonemic information, more specifically Phoneme Labels as Auxiliary input (PLA). To combine both acoustic features and PLA, we employed a DNN-based algorithm. PLA is represented by a kind of one-of-k vector, i.e., PLA has a weight value (<1.0) that gradually changes in time axis, whereas one-of-k has a binary value (0 or 1). The results showed that the proposed algorithm reduced the mel-frequency cepstral distortion for all phonemes, and almost always improved intelligibility. Notably, the intelligibility was largely improved in phonemes /s/ and /z/, mainly because the tongue is used to sustain constriction to produces these phonemes. This indicates that PLA works well to compensate the lack of a tongue.

Download Links: APSIPA Archive | University's Repository

A signal processing perspective on human gait: Decoupling walking oscillations and gestures

- Adrien Gregorj, Zeynep Yücel, Sunao Hara, Akito Monden, Masahiro Shiomi

- The 4th International Conference on Interactive Collaborative Robotics (ICR 2019), pp.75–85,Istanbul, Turkey, Aug. 2019. (Oral)

Abstract

This study focuses on gesture recognition in mobile interaction settings, i.e. when the interacting partners are walking. This kind of interaction requires a particular coordination, e.g. by staying in the field of view of the partner, avoiding obstacles without disrupting group composition and sustaining joint attention during motion. In literature, various studies have proven that gestures are in close relation in achieving such goals.

Thus, a mobile robot moving in a group with human pedestrians, has to identify such gestures to sustain group coordination. However, decoupling of the inherent -walking- oscillations and gestures, is a big challenge for the robot. To that end, we employ video data recorded in uncontrolled settings and detect arm gestures performed by human-human pedestrian pairs by adopting a signal processing approach. Namely, we exploit the fact that there is an inherent oscillatory motion at the upper limbs arising from the gait, independent of the view angle or distance of the user to the camera. We identify arm gestures as disturbances on these oscillations. In doing that, we use a simple pitch detection method from speech processing and assume data involving a low frequency periodicity to be free of gestures. In testing, we employ a video data set recorded in uncontrolled settings and show that we achieve a detection rate of 0.80.

Download Links: Springer | University's Repository (TBA)

2018

Naturalness Improvement Algorithm for Reconstructed Glossectomy Patient's Speech Using Spectral Differential Modification in Voice Conversion

- Interspeech 2018,pp. 2464–2468, Hyderabad, India, Sept. 2018. (Poster)

Abstract

In this paper, we propose an algorithm to improve the naturalness of the reconstructed glossectomy patient's speech that is generated by voice conversion to enhance the intelligibility of speech uttered by patients with a wide glossectomy. While existing VC algorithms make it possible to improve intelligibility and naturalness, the result is still not satisfying. To solve the continuing problems, we propose to directly modify the speech waveforms using a spectrum differential. The motivation is that glossectomy patients mainly have problems in their vocal tract, not in their vocal cords. The proposed algorithm requires no source parameter extractions for speech synthesis, so there are no errors in source parameter extractions and we are able to make the best use of the original source characteristics. In terms of spectrum conversion, we evaluate with both GMM and DNN. Subjective evaluations show that our algorithm can synthesize more natural speech than the vocoder-based method. Judging from observations of the spectrogram, power in high-frequency bands of fricatives and stops is reconstructed to be similar to that of natural speech.

Download Links: ISCA Archive University's Repository

2017

An Investigation to Transplant Emotional Expressions in DNN-based TTS Synthesis

- APSIPA Annual Summit and Conference 2017, TP-P4.9, 6 pages, Kuala Lumpur, Malaysia, Dec. 2017. (Poster)

Abstract

In this paper, we investigate deep neural network(DNN) architectures to transplant emotional expressions to improve the expressiveness of DNN-based text-to-speech (TTS) synthesis. DNN is expected to have potential power in mapping between linguistic information and acoustic features. From multispeaker and/or multi-language perspectives, several types of DNN architecture have been proposed and have shown good performances. We tried to expand the idea to transplant emotion, constructing shared emotion-dependent mappings. The following three types of DNN architecture are examined; (1) the parallel model (PM) with an output layer consisting of both speakerdependent layers and emotion-dependent layers, (2) the serial model (SM) with an output layer consisting of emotion-dependent layers preceded by speaker-dependent hidden layers, (3) the auxiliary input model (AIM) with an input layer consisting of emotion and speaker IDs as well as linguistics feature vectors. The DNNs were trained using neutral speech uttered by 24 speakers, and sad speech and joyful speech uttered by 3 speakers from those 24 speakers. In terms of unseen emotional synthesis, subjective evaluation tests showed that the PM performs much better than the SM and slightly better than the AIM. In addition, this test showed that the SM is the best of the three models when training data includes emotional speech uttered by the target speaker.

Download Links: IEEE Xplore | APSIPA Archive | University's Repository (TBA)

Sound sensing using smartphones as a crowdsourcing approach

- Sunao Hara, Asako Hatakeyama, Shota Kobayashi, and Masanobu Abe

- APSIPA Annual Summit and Conference 2017, FA-02.2, 6 pages, Kuala Lumpur, Malaysia, Dec. 2017. (Oral)

Abstract

Sounds are one of the most valuable information sources for human beings from the viewpoint of understanding the environment around them. We have been now investigating the method of detecting and visualizing crowded situations in the city in a sound-sensing manner. For this purpose, we have developed a sound collection system oriented to a crowdsourcing approach and carried out the sound-collection in two Japanese cities, Okayama and Kurashiki. In this paper, we present an overview of sound collections. Then, to show an effectiveness of analyzation by sensed sounds, we profile characteristics of the cities through the visualization results of the sound.

Download Links: IEEE Xplore | APSIPA Archive | University's Repository (TBA)

New monitoring scheme for persons with dementia through monitoring-area adaptation according to stage of disease

- Shigeki Kamada, Yuji Matsuo, Sunao Hara and Masanobu Abe,

- ACM SIGSPATIAL Workshop on Recommendations for Location-based Services and Social Networks (LocalRec), California, USA, Nov. 2017.

Abstract

In this paper, we propose a new monitoring scheme for a person with dementia (PwD). The novel aspect of this monitoring scheme is that the size of the monitoring area changes for different stages of dementia, and the monitoring area is automatically generated using global positioning system (GPS) data collected by the PwD. The GPS data are quantized using the GeoHex code, which breaks down the map of the entire world into regular hexagons. The monitoring area is defined as a set of GeoHex codes, and the size of the monitoring area is controlled by the granularity of hexagons in the GeoHex code. The stages of dementia are estimated by analyzing the monitoring area to determine how frequently the PwD wanders. In this paper, we also examined two aspects of the implementation of the proposed scheme. First, we proposed an algorithm to estimate the monitoring area and evaluate its performance. The experimental results showed that the proposed algorithm can estimate the monitoring area with a precision of 0.82 and recall of 0.86 compared with the ground truth. Second, to investigate privacy considerations, we showed that different persons have different preferences for the granularity of the hexagons in the monitoring systems. The results indicate that the size of the monitoring area also should be changed for PwDs.

Prediction of subjective assessments for a noise map using deep neural networks

- Shota Kobayashi, Sunao Hara, Masanobu Abe

- Proceedings of UbiComp/ISWC 2017 Adjunct, pp.113–116, Hawaii, Sept. 2017.

Abstract

In this paper, we investigate a method of creating noise maps that take account of human senses. Physical measurements are not enough to design our living environment and we need to know subjective assessments. To predict subjective assessments from loudness values, we propose to use metadata related to where, who and what is recording. The proposed method is implemented using deep neural networks because these can naturally treat a variety of information types. First, we evaluated its performance in predicting five-point subjective loudness levels based on a combination of several features: location-specific, participant-specific, and sound-specific features. The proposed method achieved a 16.3 point increase compared with the baseline method. Next, we evaluated its performance based on noise map visualization results. The proposed noise maps were generated from the predicted subjective loudness level. Considering the differences between the two visualizations, the proposed method made fewer errors than the baseline method.

Speaker Dependent Approach for Enhancing a Glossectomy Patient's Speech via GMM-based Voice Conversion

- Kei Tanaka, Sunao Hara, Masanobu Abe, Masaaki Sato, Shogo Minagi

- Proceedings of Interspeech 2017, pp. 3384–3388, Stockholm, Sweden, Aug. 2017.

Abstract

In this paper, using GMM-based voice conversion algorithm, we propose to generate speaker-dependent mapping functions to improve the intelligibility of speech uttered by patients with a wide glossectomy. The speaker-dependent approach enables to generate the mapping functions that reconstruct missing spectrum features of speech uttered by a patient without having influences of a speaker's factor. The proposed idea is simple, i.e., to collect speech uttered by a patient before and after the glossectomy, but in practice it is hard to ask patients to utter speech just for developing algorithms. To confirm the performance of the proposed approach, in this paper, in order to simulate glossectomy patients, we fabricated an intraoral appliance which covers lower dental arch and tongue surface to restrain tongue movements. In terms of the Mel-frequency cepstrum (MFC) distance, by applying the voice conversion, the distances were reduced by 25% and 42% for speakerdependent case and speaker-independent case, respectively. In terms of phoneme intelligibility, dictation tests revealed that speech reconstructed by speaker-dependent approach almost always showed better performance than the original speech uttered by simulated patients, while speaker-independent approach did not.

2016

Kei Tanaka, Sunao Hara, Masanobu Abe, Shogo Minagi," Enhancing a Glossectomy Patient's Speech via GMM-based Voice Conversion," APSIPA Annual Summit and Conference 2016 (2016.12)

In this paper, we describe the use of a voice conversion algorithm for improving the intelligibility of speech by patients with articulation disorders caused by a wide glossectomy and/or segmental mandibulectomy. As a first trial, to demonstrate the diff iculty of the task at hand, we implemented a conventional Gaussian mixture model (GMM) - based algorithm using a frame - by - frame approach. We compared voice conversion performance among normal speakers and one with an articulation disorder by measuring the nu mber of training sentences, the number of GMM mixtures, and the variety of speaking styles of training speech. According to our experi ment results, the mel-cepstrum (MC) distance was decreased by 40% in all pairs of speakers as compared with that of pre-conversion measures; however, at post - conversion, the MC distance between a pair of a glossectomy speaker and a normal speaker was 28% larger than that between pairs of normal speakers. The analysis of resulting spectrograms showed that the voice conversion algorithm successfully reconstructed high-frequency spectra in phonemes /h/, /t/, /k/, /ts/, and /ch/; we also confirmed improvements of speech intelligibility via informal listening tests.

Atsuya Namba, Sunao Hara, Masanobu Abe,"LiBS:Lifelog browsing system to support sharing of memories," Proc. UbiComp/ISWC 2016 Adjunct. (2016.9)

We propose a lifelog browsing system through which users can share memories of their experiences with other users. Most importantly, by using global positioning system data and time stamps, the system simultaneously displays a va- riety of log information in a time-synchronous manner. This function empowers users with not only an easy interpreta- tion of other users’ experiences but also nonverbal notifi- cations. Shared information on this system includes pho- tographs taken by users, Google street views, shops and restaurants on the map, daily weather, and other items rel- evant to users’ interests. In evaluation experiments, users preferred the proposed system to conventional photograph albums and maps for explaining and sharing their experi- ences. Moreover, through displayed information, the listen- ers found out their interest items that had not been men- tioned by the speakers.

Shigeki Kamada, Sunao Hara, Masanobu Abe," Safety vs. Privacy: User Preferences from the Monitored and Monitoring Sides of a Monitoring System" Proc. UbiComp/ISWC 2016 Adjunct. (2016.9)

In this study, in order to develop a monitoring system that takes into account privacy issues, we investigated user preferences in terms of the monitoring and privacy protec- tion levels. The people on the monitoring side wanted the monitoring system to allow them to monitor in detail. Con- versely, it was observed for the people being monitored that the more detailed the monitoring, the greater the feelings of being surveilled intrusively. Evaluation experiments were performed using the location data of three people in differ- ent living areas. The results of the experiments show that it is possible to control the levels of monitoring and privacy protection without being affected by the shape of a living area by adjusting the quantization level of location informa- tion. Furthermore, it became clear that the granularity of location information satisfying the people on the monitored side and the monitoring side is different.

2015

Masaki Yamaoka, Sunao Hara, Masanobu Abe, ''A Spoken Dialog System with Redundant Response to Prevent User Misunderstanding,'' in Proceedings of APSIPA Annual Summit and Conference 2015, pp.223--226, Hog Kong, Dec. 2015.

We propose a spoken dialog strategy for car naviga- tion systems to facilitate safe driving. To drive safely, drivers need to concentrate on their driving; however, their concentration may be disrupted due to disagreement with their spoken dialog system. Therefore, we need to solve the problems of user misunderstandings as well as misunderstanding of spoken dialog systems. For this purpose, we introduced a driver workload level in spoken dialog management in order to prevent user misunderstandings. A key strategy of the dialog management is to make speech redundant if the driver’s workload is too high in assuming that the user probably misunderstand the system utterance under such a condition. An experiment was conducted to compare performances of the proposed method and a conventional method using a user simulator. The simulator is developed under the assumption of two types of drivers: an experienced driver model and a novice driver model. Experimental results showed that the proposed strategies achieved better performance than the conventional one for task completion time, task completion rate, and user’s positive speech rate. In particular, these performance differences are greater for novice users than for experienced users.

Tadashi Inai, Sunao Hara, Masanobu Abe, Yusuke Ijima, Noboru Miyazaki and Hideyuki Mizuno, ''A Sub-Band Text-to-Speech by Combining Sample-Based Spectrum with Statistically Generated Spectrum,'' in Proceedings of Interspeech 2015, pp.264--268, Dresden, Germany, Sept. 2015.

As described in this paper, we propose a sub-band speech synthesis approach to develop a high quality Text-to-Speech (TTS) system: a sample-based spectrum is used in the high-frequency band and spectrum generated by HMM-based TTS is used in the low-frequency band. Herein, sample-based spectrum means spectrum selected from a phoneme database such that it is the most similar to spectrum generated by HMM-based speech synthesis. A key idea is to compensate over-smoothing caused by statistical procedures by introducing a sample-based spectrum, especially in the high-frequency band. Listening test results show that the proposed method has better performance than HMM-based speech synthesis in terms of clarity. It is at the same level as HMM-based speech synthesis in terms of smooth- ness. In addition, preference test results among the proposed method, HMM-based speech synthesis, and waveform speech synthesis using 80 min speech data reveal that the proposed method is the most liked. index term: HMM-based speech synthesis, Sub-band,

Akinori Kasai, Sunao Hara, Masanobu Abe, ''Extraction of key segments from day-long sound data,'' HCI International 2015, Los Angels, CA, USA, Aug. 2015.

We propose a method to extract particular sound segments from the sound recorded during the course of a day in order to provide sound segments that can be used to facilitate memory. To extract important parts of the sound data, the proposed method utilizes human behavior based on a multi sensing approach. To evaluate the performance of the proposed method, we conducted experiments using sound, acceleration, and global positioning system data collected by five participants for approximately two weeks. The experimental results are summarized as follows: (1) various sounds can be extracted by dividing a day into scenes using the acceleration data; (2) sound recorded in unusual places is preferable to sound recorded in usual places; and (3) speech is preferable to non speech sound.

Yuji Matsuo, Sunao Hara, Masanobu Abe, ''Algorithm to estimate a living area based on connectivity of places with home,'' HCI International 2015, Los Angels, CA, USA, Aug. 2015.

We propose an algorithm to estimate a person’s living area using his/her collected Global Positioning System (GPS) data. The most important feature of the algorithm is the connectivity of places with a home, i.e., a living area must consist of a home, important places, and routes that connect them. This definition is logical because people usually go to a place from home, and there can be several routes to that place. Experimental results show that the proposed algorithm can estimate living area with a precision of 0.82 and recall of 0.86 compared with the grand truth established by users. It is also confirmed that the connectivity of places with a home is necessary to estimate a reasonable living area.

Sunao Hara, Masanobu Abe, Noboru Sonehara, “Sound collection and visualization system enabled participatory and opportunistic sensing approaches,” in Proceedings of 2nd International Workshop on Crowd Assisted Sensing, Pervasive Systems and Communications (CASPer-2015), Workshop of PerCom 2015, pp.394–399, St. Louis, Missoouri, USA, March 2015.

This paper presents a sound collection system to visualize environmental sounds that are collected using a crowd- sourcing approach. An analysis of physical features is generally used to analyze sound properties; however, human beings not only analyze but also emotionally connect to sounds. If we want to visualize the sounds according to the characteristics of the listener, we need to collect not only the raw sound, but also the subjective feelings associated with them. For this purpose, we developed a sound collection system using a crowdsourcing approach to collect physical sounds, their statistics, and subjective evaluations simultaneously. We then conducted a sound collection experiment using the developed system on ten participants.We collected 6,257 samples of equivalent loudness levels and their locations, and 516 samples of sounds and their locations. Subjective evaluations by the participants are also included in the data. Next, we tried to visualize the sound on a map. The loudness levels are visualized as a color map and the sounds are visualized as icons which indicate the sound type. Finally, we conducted a discrimination experiment on the sound to implement a function of automatic conversion from sounds to appropriate icons. The classifier is trained on the basis of the GMM-UBM (Gaussian Mixture Model and Universal Background Model) method. Experimental results show that the F-measure is 0.52 and the AUC is 0.79.

Masanobu Abe, Akihiko Hirayama, Sunao Hara, “Extracting Daily Patterns of Human Activity Using Non-Negative Matrix Factorization,” in Proceedings of IEEE International Conference on Consumer Electronics (IEEE-ICCE 2015), pp. 36–39, Las Vegas, USA, Jan. 2015.

This paper presents an algorithm to mine basic patterns of human activities on a daily basis using non-negative matrix factorization (NMF). The greatest benefit of the algorithm is that it can elicit patterns from which meanings can be easily interpreted. To confirm its performance, the proposed algorithm was applied to PC logging data collected from three occupations in offices. Daily patterns of software usage were extracted for each occupation. Results show that each occupation uses specific software in its own time period, and uses several types of software in parallel in its own combinations. Experiment results also show that patterns of 144 dimension vectors were compressible to those of 11 dimension vectors without degradation in occupation classification performance. Therefore, the proposed algorithm compressed basic software usage patterns to about one-tenth of their original dimensions while preserving the original information. Moreover, the extracted basic patterns showed reasonable interpretation of daily working patterns in offices.

2014

Takuma Inoue, Sunao Hara, Masanobu Abe, “A Hybrid Text-to-Speech Based on Sub-Band Approach,” in Proceedings of APSIPA ASC 2014, FA-P-3, 4 pages, Cambodia, Dec. 2014.

This paper proposes a sub-band speech synthesis approach to develop high-quality Text-to-Speech (TTS). For the low-frequency band and high-frequency band, Hidden Markov Model (HMM)-based speech synthesis and waveform-based speech synthesis are used, respectively. Both speech synthesis methods are widely known to show good performance and to have benefits and shortcomings from different points of view. One motivation is to apply the right speech synthesis method in the right frequency band. Experiment results show that in terms of the smoothness the proposed approach shows better performance than waveform-based speech synthesis, and in terms of the clarity it shows better than HMM-based speech synthesis. Consequently, the proposed approach combines the inherent benefits from both waveform-based speech synthesis and HMM-based speech synthesis.

Masanobu Abe, Daisuke Fujioka, Kazuto Hamano, Sunao Hara, Rika Mochizuki, Tomoki Watanabe, “New Approach to Emotional Information Exchange: Experience Metaphor Based on Life Logs,” in Proceedings of PerCom 2014, Work-in-Progress session, 4 pages, Budapest, Hungary, March 2014.

We are striving to develop a new communication technology based on individual experiences that can be pulled out from life logs. We have proposed the “Emotion Communication Model” and confirmed that significant correlation exists between experience and emotion. As the second step, particularly addressing impressive places and events, this paper investigates the extent to which we can share emotional information with others through individuals' experiences. Subjective experiments were conducted using life log data collected during 7-47 months. Experiment results show that (1) impressive places are determined by the distance from home, visit frequency, and direction from home and that (2) positive emotional information is highly consistent among people (71.4%), but it is not true for negative emotional information. Therefore, experiences are useful as metaphors to express positive emotional information.

2012

Masanobu Abe, Daisuke Fujioka, Hisashi Handa, “A life log collecting system supported by smartphone to model higher-level human behaviors,” Proc. 2012 Sixth International Conference on Complex, Intelligent, and Software Intensive Systems (CISIS), pp.665-670. July 2012.

In this paper, we propose a system to collect human behavior in detail with higher-level tags such as attitude, accompanying-person, expenditure, tasks and so on. The system makes it possible to construct database to model higher-level human behaviors that are utilized in context-aware services. The system has two input methods; i.e., on-the-fly by a smartphone and post-processing by a PC browser. On the PC, users can interactively know where and when they were, which results in easily and accurately constructing human behavior database. To decrease numbers of operations, users’ repetitions and similarity of among users are used. According to experiment results, the system successfully decreases operation time, higher-level tags are properly collected and behavior tendencies of the users are clearly captured.

Member

Update Apr. 8, 2024

Graduate Students (M2)

- ICHIKAWA, Natsuki (

n_ichi)

Graduate Students (M1)

- IWASAKI, Matsuri (

m_iwa) - OKAMURA, Masayori (

m_oka) - HIROHATA, Kazuto (

k_hiro)

Undergraduate Student (B4)

- SENO, Kazuma (

k_seno) - FUJITA, Kohei (

k_fuji) - YAMAMOTO, Kazunari (

k_yama) - TANAKA, Takahiro (

t_tana)